Cloud, Data Lake, Big Data, AI, Streaming, devops, data protection and security...these developments in our information systems in recent years have had a strong impact on data architectures.

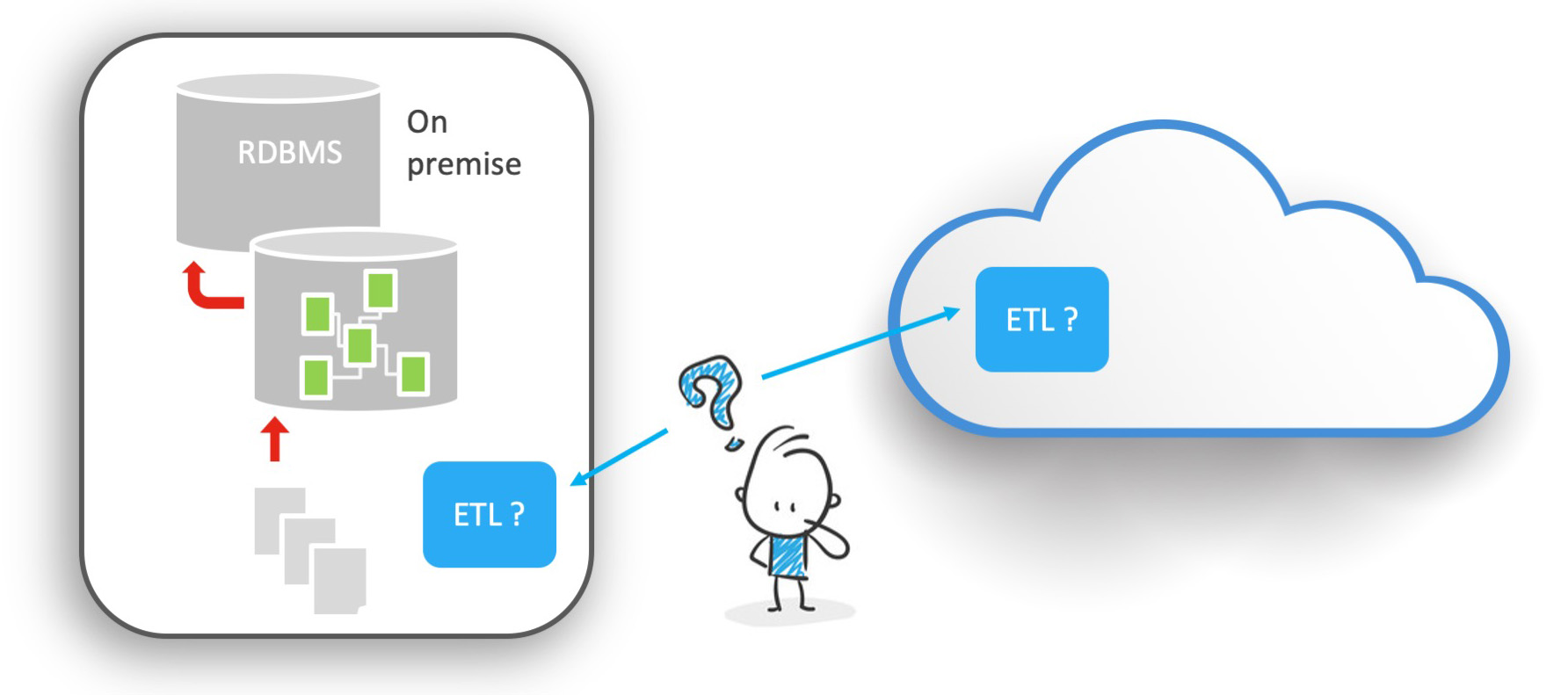

In this context, data integration must also undergo its revolution. You may still be using a traditional ETL.

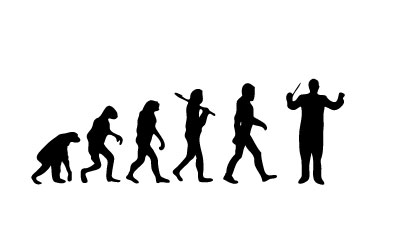

You are then a one-man band, impressive and efficient in his field, but with a limited number of instruments and capacity.

ETL is at the heart of the system and must comply with numerous requirements and incessant developments.

Its agility is put to the test, its cost challenged.

The findings are increasingly unanimous: the integration platform of the Chief Data Officer, or his equivalent, must now resemble an orchestra conductor rather than a one-man band.

The goal is not to do it all yourself, but to intelligently coordinate and optimize available resources.

This series of articles (this is the first) will aim at understanding the challenges of data integration and to measuring the impacts on the way a solution must operate on data today.

ELT versus ETL, an old debate that has been ended since a long time?

You may remember the ELT versus ETL debate that emerged in the 2000s.

It is still possible to consult traces of this type of debate on the Internet and in the press. For example, this 2005 article from BFM TV explaining the merits of a French solution at the time.

When the first ETLs (Extract Transform & Load) appeared, there were already were protesters, supporters of codeline, advocating body and soul that nothing beats a good script written by hand.

This cleavage remains current and perhaps reminds you of some heated but ongoing discussions on many other subjects beyond ETL? 😉

The ETL tool quickly became obvious, however

It made it possible to industrialize and standardize data integration processes across the entire organization and to disregard the existing Information System by proposing a unique language for transformation.

But fairly quickly, in certain configurations, the volume of data handled forced some users, followed by some editors, to offer an alternative to ETL engines.

This alternative consisted in moving the data transformation site to the target without using a proprietary engine for the transformation.

This is the famous ELT (Extract Load and Transform) approach.

In this approach, the data is loaded into the target database, then transformed and checked in the target, and no longer in an intermediate engine.

The transformation is moved from the engine to the database systems. The use of this type of architecture is still dominated today by the need for performance.

Each approach enjoying its advantages, one would have believed this debate closed for a long time.

Just like in the hardware world, where some are Mac and some are PC, in the data world, there are those who are ETL and those who are ELT. Debate closed. To each their own.

However, this did not take into account the recent evolutions in our Information Systems.

The debate was only partially closed, awaiting the first opportunity to resurface.

- What happened?

- What developments sparked a new form of debate around transformation?

- First, the emergence of the Cloud and Big Data projects.

Cloud architecture and Big Data put the ball back in the center

Cloud architecture and Big Data are putting the ball back in the center for several reasons.

According to Gartner, "by 2022, up to 60% of organizations will use service offerings managed in the cloud by an external service provider, this is double the percentage compared to 2018" *source

This implies numerous evolutions in the global architecture of the Information System and, consequently, of data flows.

First, the Cloud is pushing for the decentralization of infrastructure, and above all, data.

It is not uncommon to see information systems increasingly fragmented, with components on premise and in the Cloud.

The SAAS approach has taken many organizations to an ever-increasing silo of their applications.

One could almost think that the Cloud has killed the traditional ERP, or that the latter no longer plays such a central role in the systems…but that is another debate, perhaps for another article.

The observation is clear: the IS of the 2020s is massively decentralized! The data is dispatched into application silos, distributed around the world.

We understand that in this situation, the use of a transformation engine, by its very essence centralized, poses a first question: where are we going to put the engine? Should it be on premise? Should it be in the Cloud?

And positioning it in the Cloud only displaces the problem and brings us to another question: in which Cloud?

We are talking today about multi-cloud or Hybrid Cloud systems! It is not unusual to see Google components coexist within a single organization, with private clouds, and somewhere else proprietary SAAS applications.

A real question of architecture arises, in addition to security and cost issues, which are far from negligible.

In this context, an approach without a transformation engine (ELT) is once again positioned as an alternative.

In fact, it removes the question, both of architecture and of cost!

Also, the choice of positioning an application or a software solution in the Cloud implies at least two immediate needs: it will be necessary to migrate the data assets to this new Cloud application and it will be necessary to integrate this new application reliably and efficiently with the rest of the information system.

This raises new questions: do I continue to use my traditional ETL or EAI solution? Is it compatible? Is it sufficient? Will the ROI be there? Or do I opt for a purely cloud alternative? I would then undo the information silo of my applications, by making them communicate, but at a price: creating an information silo for the integration of data by having several solutions for very similar needs!

Again, in this context, the ELT is a real alternative.

There is no need to deploy anything new to the cloud.

Finally, outside of the Cloud, other elements have shaken up the architecture of information systems, and in turn, of data integration: Big Data, and behind it, implicitly, all data science projects, in addition to data engineering.

Indeed, by definition, these are data transformation projects. Hadoop, with its Map Reduce concept, is a way of transforming data. But then, where do we place the ETL in this case? What is its added value in a Big Data project?

Spark or Storm, aren’t these also, in essence, engines of data transformation?

We understand then, that Big Data processing algorithms ultimately naturally replace ETL.

If we wanted to summarize the subject: Spark, Google BigQuery, Snowflake, Big Table, Kafka, Flink…each of these tools is sometimes a thousand times more powerful than the ETL that you used in the 90s. Has Big Data killed the traditional ETL by making it obsolete?

This is why we had to go from one-man band to orchestra conductor. The one-man band tried to do everything, but failed to keep up. The conductor distributes, coordinates and optimizes the work of the musicians in his orchestra.

Let’s consider the integration platform as a conductor, and the power of the multiple tools of our information system as many instruments adapted to each situation.

What about ELT? It has had to reinvent itself but it is in its element, namely to generate and orchestrate code: Java, Spark, Python, even SQL specific to these environments.

Will it be there to industrialize and maximize performance? This will be the subject of our next article…

Following article coming soon: "Performance and industrialization, the key elements of the delegation of transformations"

About the Author :

Fabien BRUDER, Co-founder of Stambia since 2009.

Computer engineer EPITA, specialized in artificial intelligence, graduated in business management at Paris IAE.

After IBS France (integrator) and subsequently Sagent (publisher), he spent 7 years with the publisher Sunopsis (Oracle), as a consultant, technical director and later as a branch manager.

He has been working as an expert in the field of data integration for over 20 years..